Company

Stitch Fix

Role

Lead Product Designer

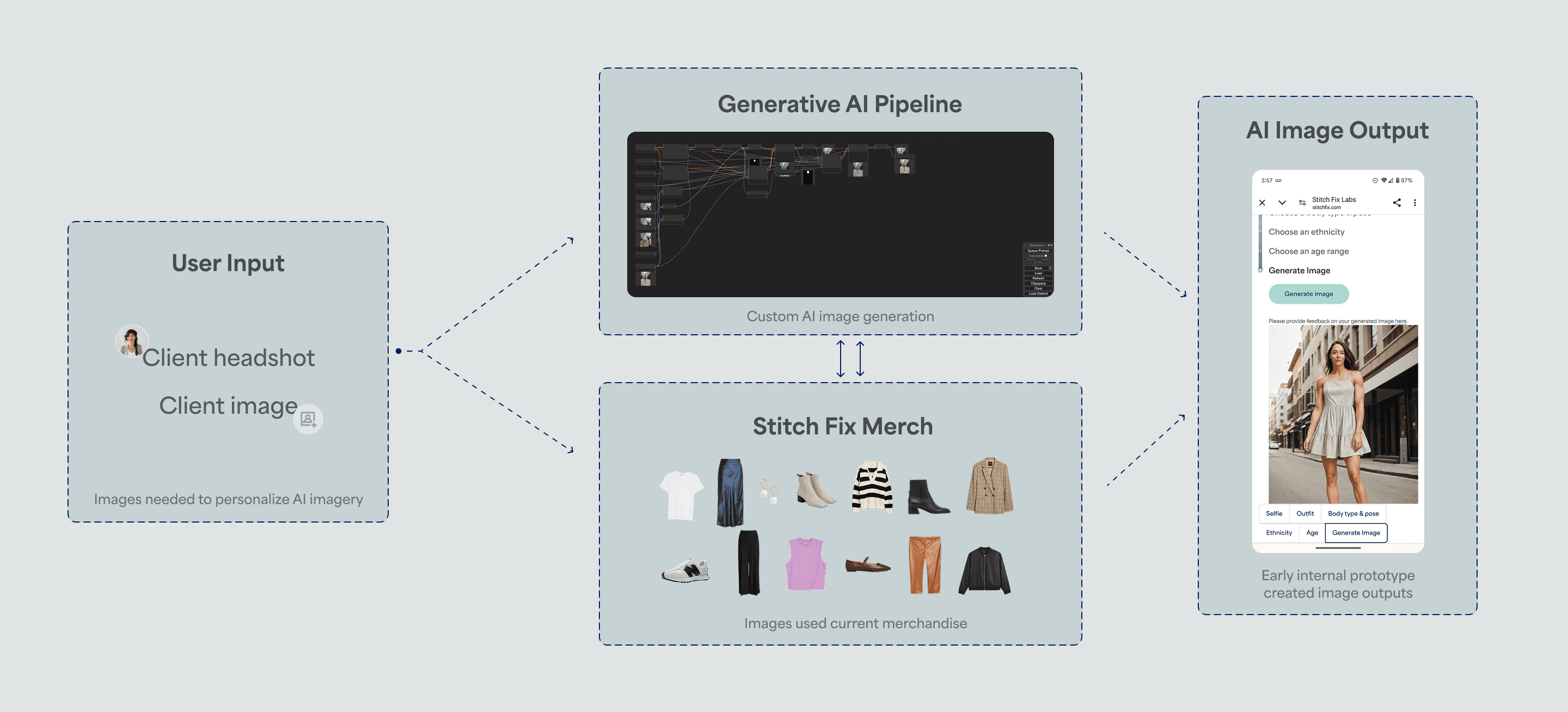

Our first goal: Build an AI Pipeline to start generating images we could test with

We partnered with an agency to build the backend and refine photo quality. Early testing of outputs helped us understand what we would need from users—from photo inputs to contextual details—to deliver realistic results.

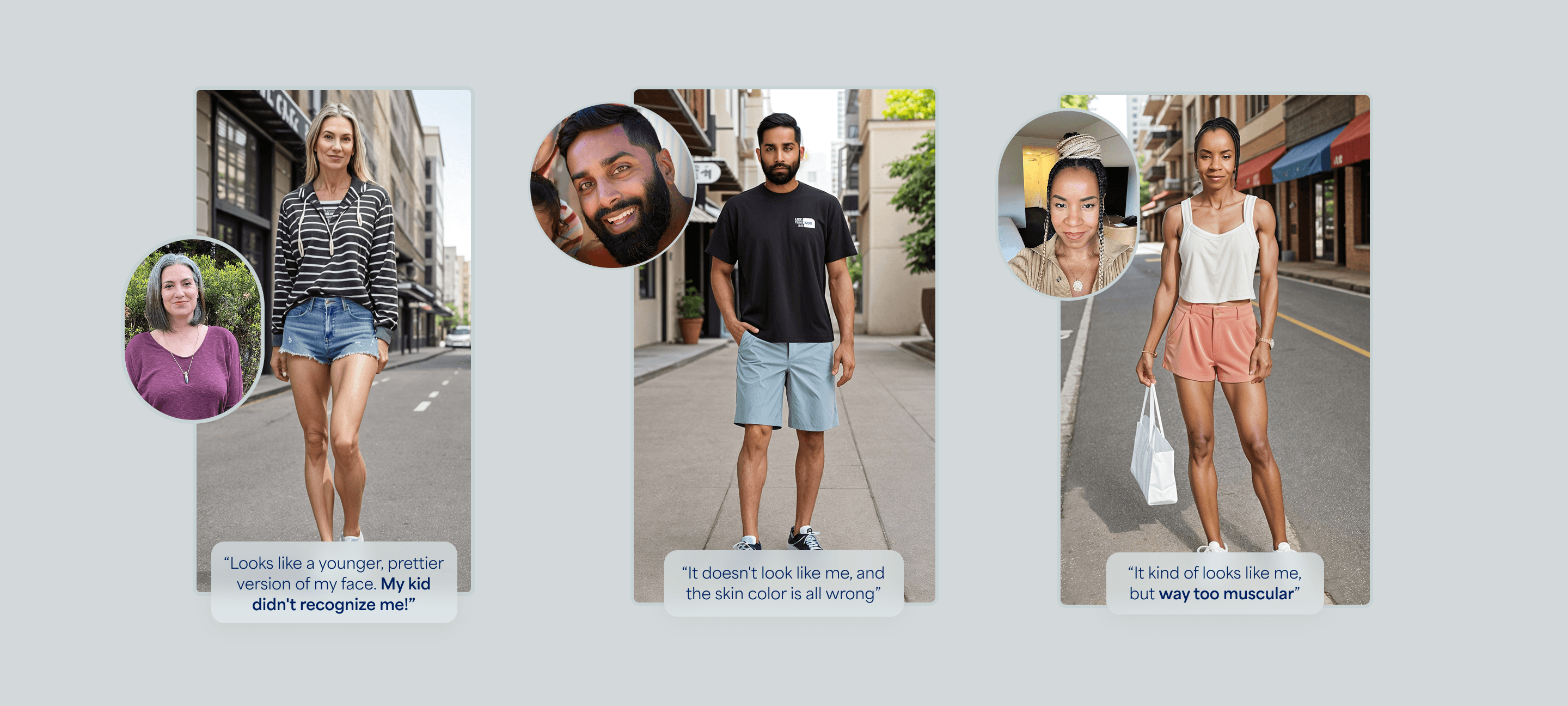

Early user testing showed problems in the AI model that were off-putting to users

We tested image quality as often as possible, and our first round of user testing showed that we had much more work to do before this technology could be production-ready.

We were seeing problems with the model making everyone look skinnier, more muscular, and taller than they actually were. The AI was also hallucinating badly when it came to clothing, which we knew was a crucial part of this experience.

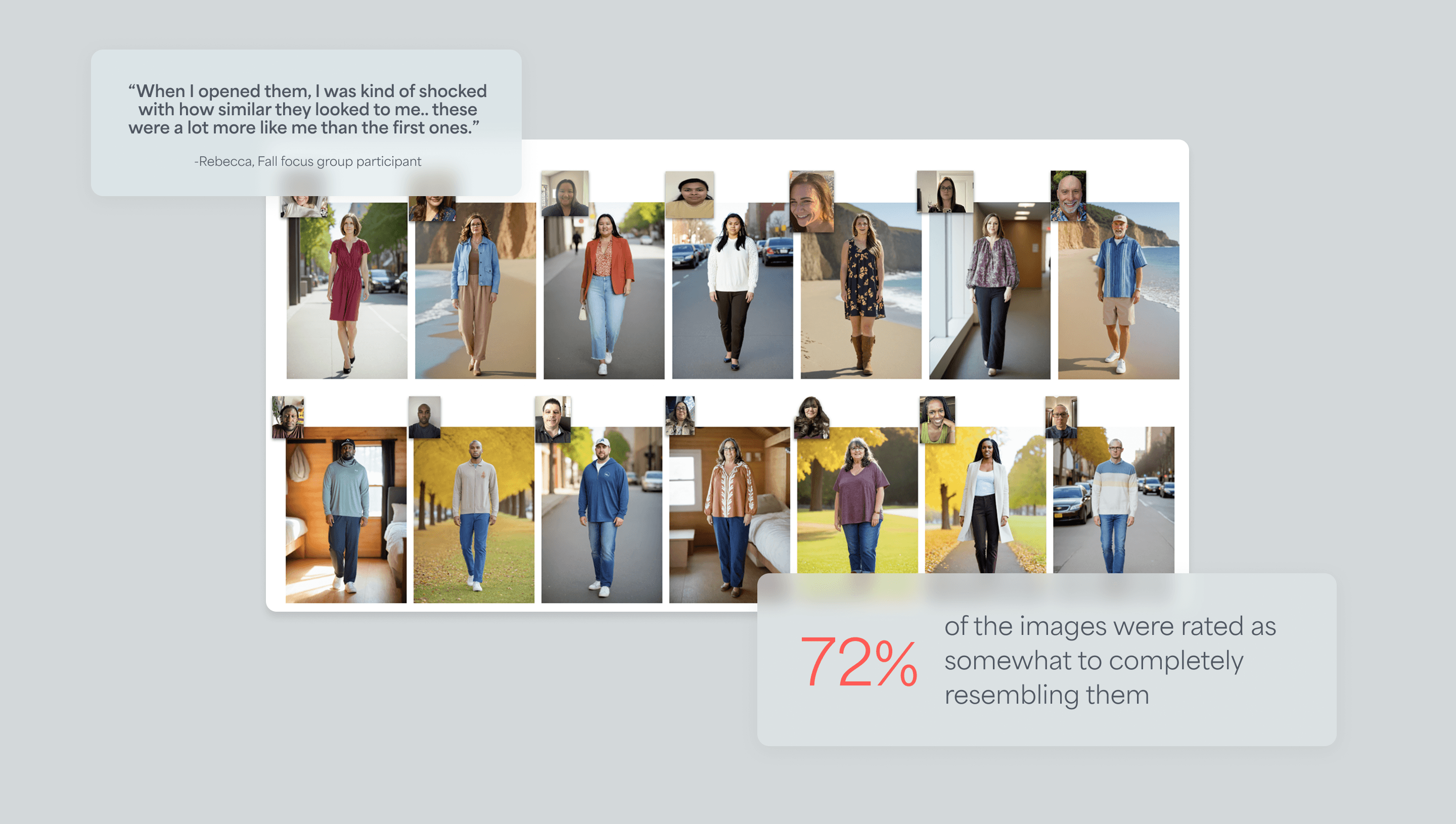

Once we had improved image quality, we gathered a diverse focus group to hear first-hand what our clients thought about the images, the outfits, and AI in general

It's always such a privilege to talk to clients and potential users in a small group setting. For this focus group, we again shared personalized imagery with the groups and discussed their thoughts on using AI imagery to shop.

We even brought back some of the same participants from our first round of testing to hear what they thought about the images now that we had improved the quality!

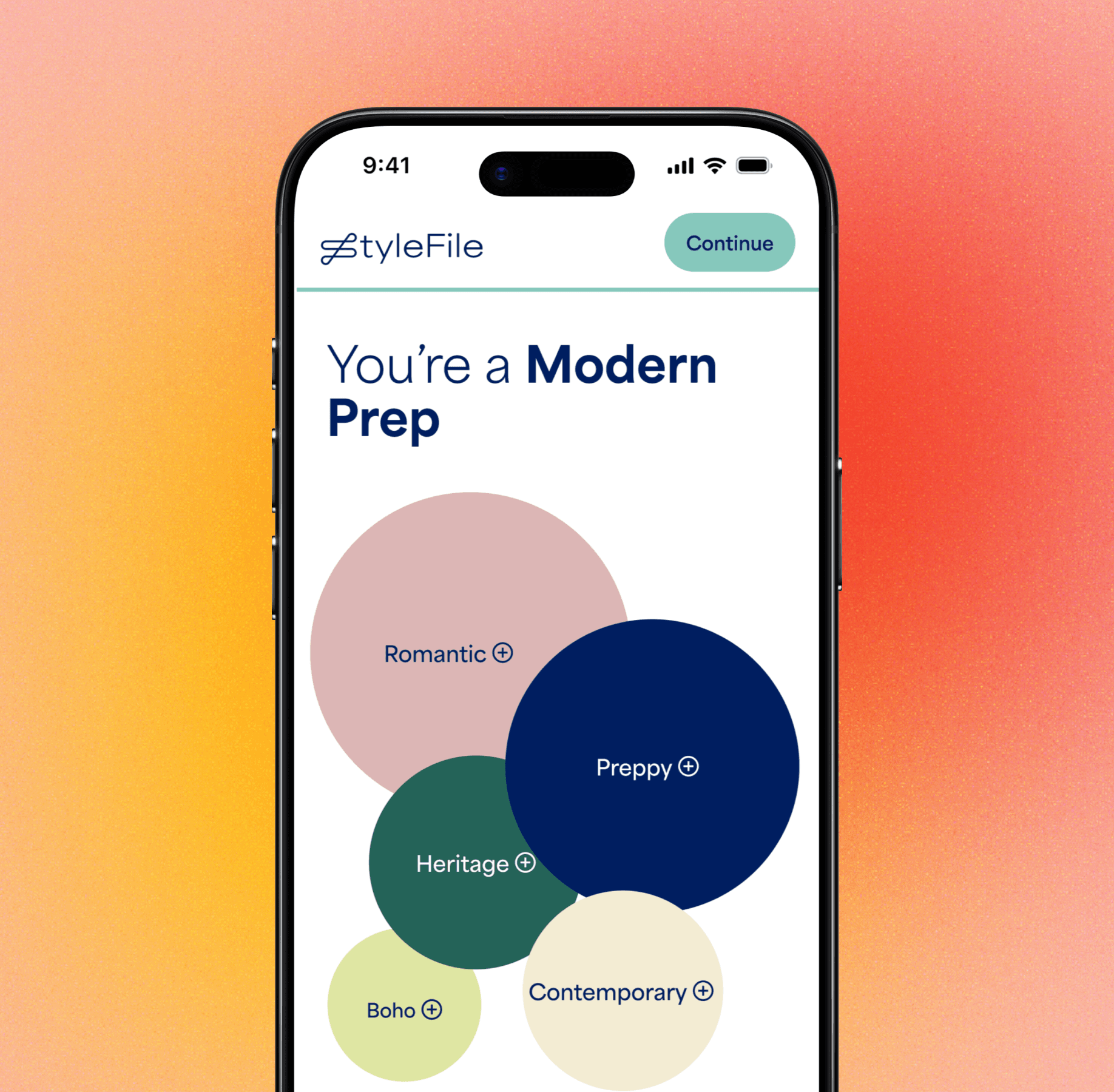

Design explorations and feedback loops with our executive team helped refine the final experience

I explored multiple design approaches, iterated rapidly over several weeks, and used each review cycle to sharpen both the vision and the execution.

I also led alignment with cross-functional teams and partners, such as engineering and marketing. Marketing had early interest in featuring this as part of a future campaign, so I kept them consistently informed and incorporated their perspective into the design process.

These collaborative loops helped us converge on an experience that was both compelling for users and well-positioned for launch.

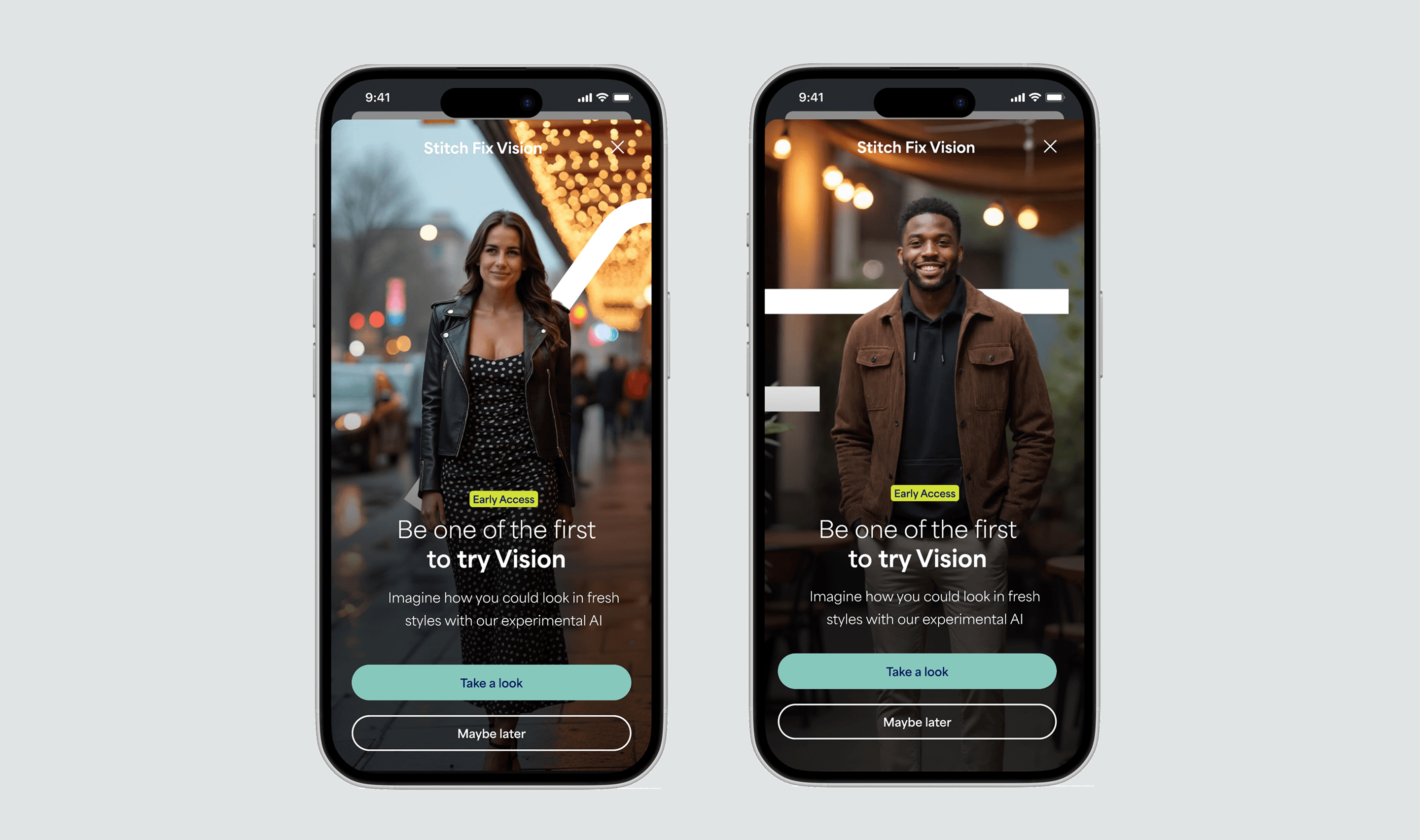

Designing the details: The final Vision onboarding experience walks the client through step by step

Vision requires photo uploads, a moment where clients often hesitate, so we used a friendly, approachable flow that guides each step, shows examples, and explains exactly how their photos will be used.

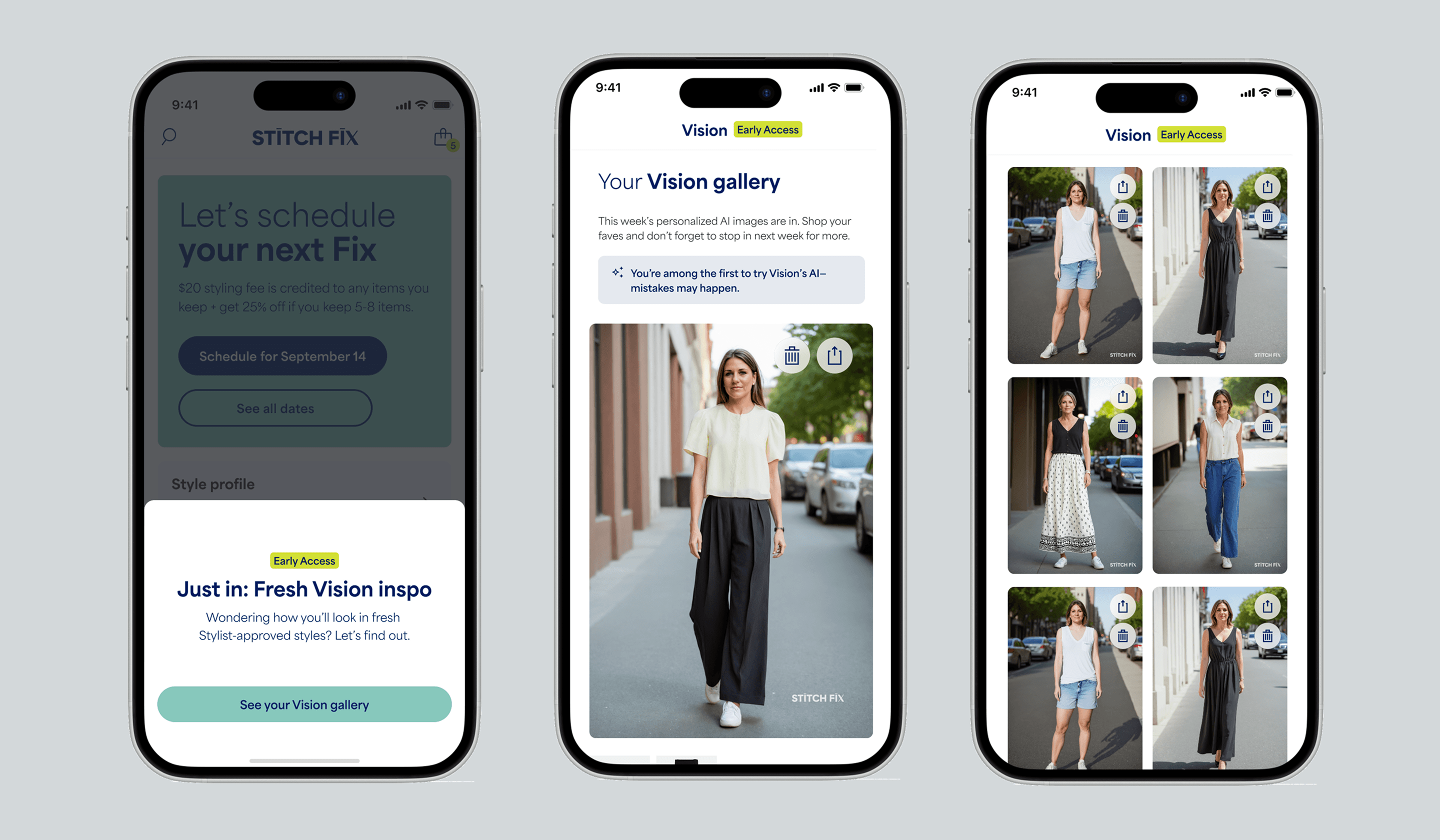

Vision Gallery: Images drop weekly by design, building anticipation and making each batch feel intentional

The weekly drop became a foundational part of the Vision experience.

By pacing new images as curated, time-based releases, we created a reason for clients to come back regularly and made each set feel meaningful—not just more instant content.

This approach also reinforces Vision’s differentiated value: it evolves over time, and users get to see that progress.

Post-launch, we are seeing revenue from Vision clients almost doubling! This validates that a predictable reveal cadence can meaningfully increase both revisit and purchase behavior.

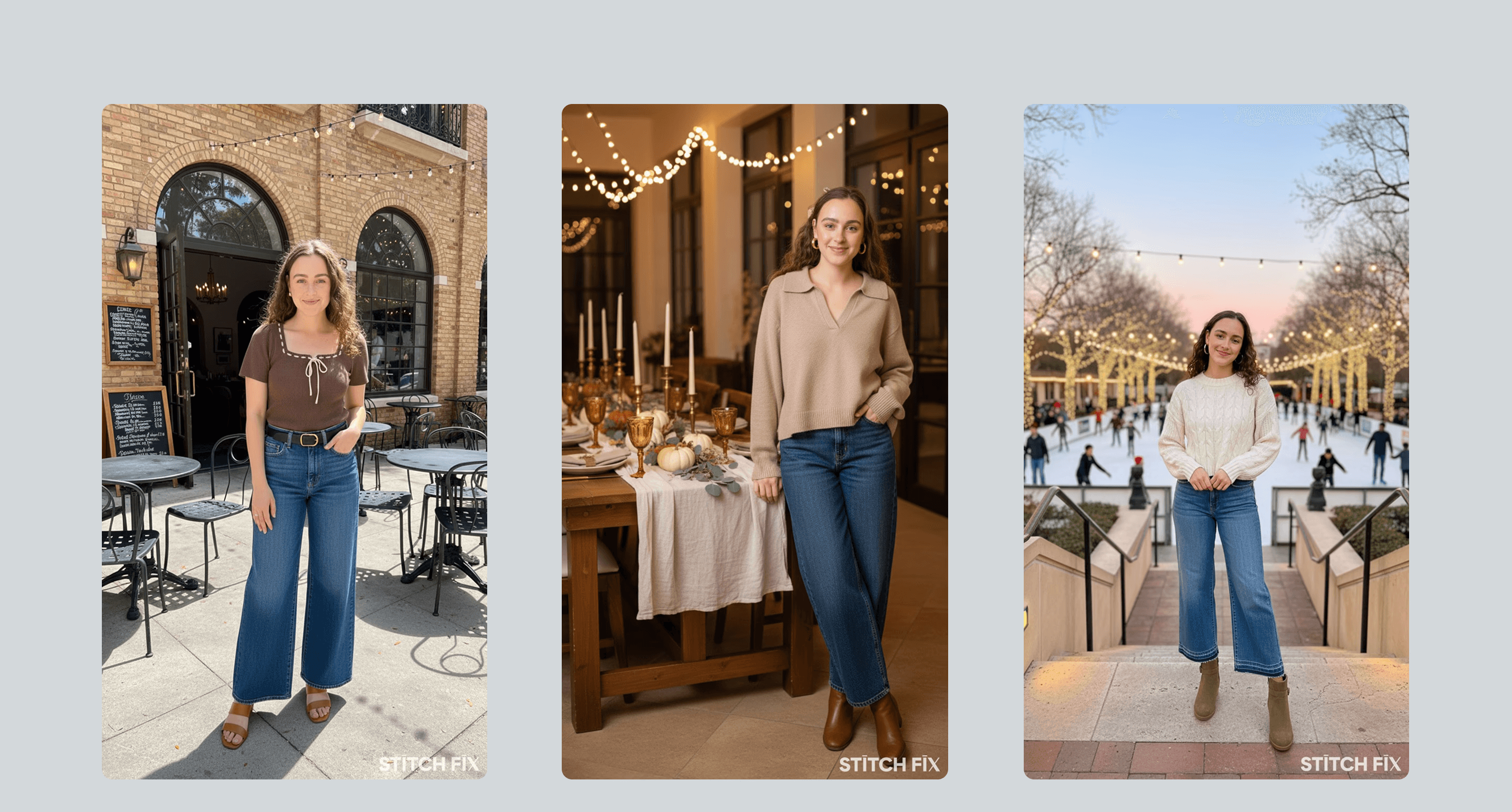

And we've continued to improve image quality as AI capabilities advance.

You can see how the quality has changed over time in these examples from my own Vision drops!